Emergent abilities in Large Language Models

Emergence And Reasoning In Large Language Models (2022 Paper)

ArXiv link: https://arxiv.org/abs/2206.07682

YouTube video link: https://www.youtube.com/watch?v=0Z1ZwY2K2-M

This paper was written by Google employee Jason Wei et al. It presents evidence that LLMs gain emergent abilities as they scale in size.

One definition of emergence is: "A qualitative change that arises from quantitative changes"

There are different types of emergence that appear in LLMs. Here are some:

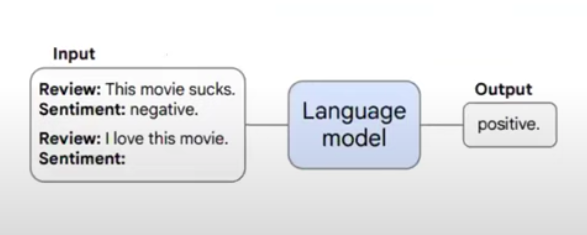

- Emergence in few-shot prompting: You include a few examples as input-output pairs in the prompt, then ask the model to produce the output for your own input.

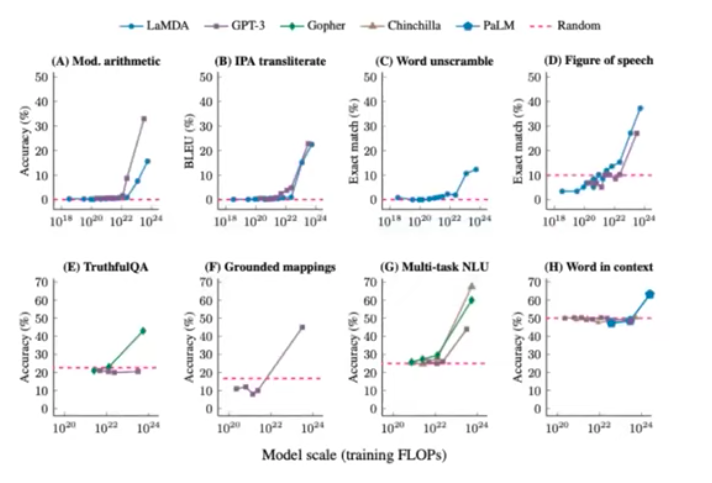
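To make the few-shot setup concrete, here is a minimal sketch of how such a prompt could be assembled. The `build_few_shot_prompt` helper, the `Input:`/`Output:` labels, and the sentiment examples are illustrative assumptions, not taken from the paper; the resulting string would be sent to whatever model you are testing.

```python
def build_few_shot_prompt(examples, query):
    """Format (input, output) pairs, then the query with its output left blank.

    Hypothetical helper for illustration; the label format is an assumption.
    """
    blocks = [f"Input: {x}\nOutput: {y}" for x, y in examples]
    # The final block has no output: the model is expected to fill it in.
    blocks.append(f"Input: {query}\nOutput:")
    return "\n\n".join(blocks)


# Made-up sentiment-classification examples.
examples = [
    ("I loved this movie.", "positive"),
    ("The plot was dull and predictable.", "negative"),
]

prompt = build_few_shot_prompt(examples, "A clever and surprising film.")
print(prompt)
```

No model weights change here: the examples live only in the prompt, and the model has to infer the task from them.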

Below is another graph that shows how scaling induces emergent abilities across many tasks and many different models. X-axis is the model's scale. Y-axis is the accuracy for that specific task.

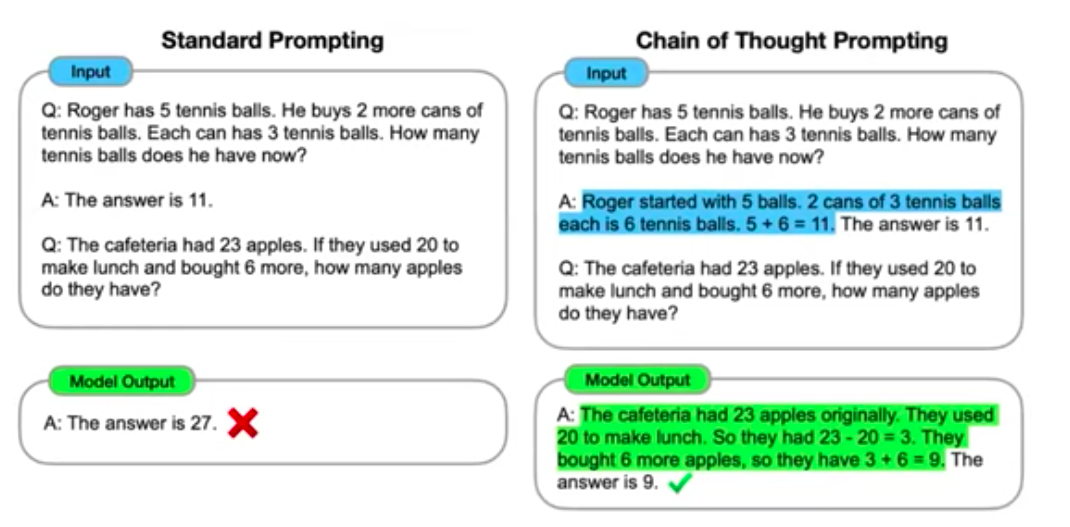

- Chain-of-thought prompting: In this type of prompting, you don't just show input-output examples. You also include the rationale, or chain of thought, that leads to the desired answer. The model then mimics that chain of thought and applies it to new problems. Below is an example of chain-of-thought prompting vs. standard prompting:

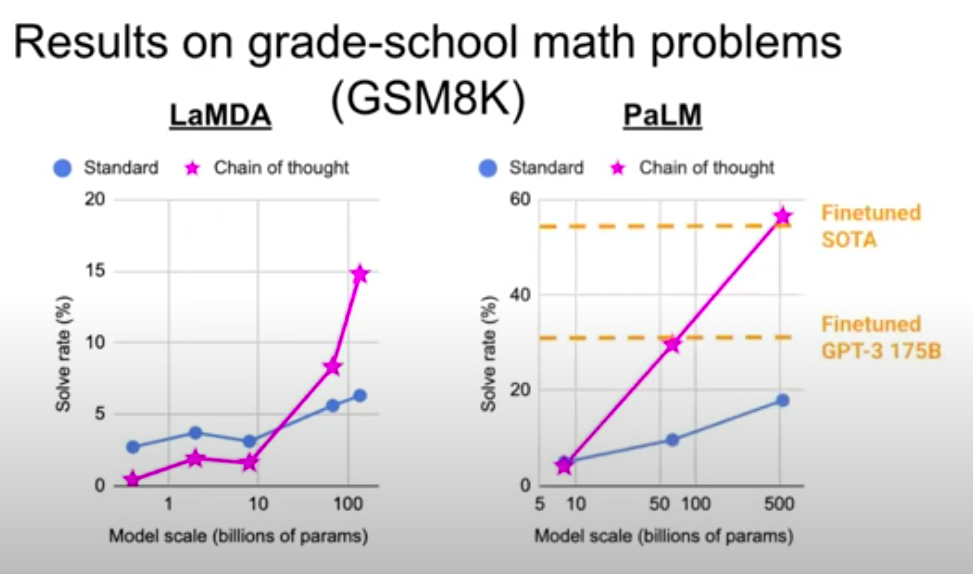
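The difference between the two prompt styles can also be sketched in code. This is a rough illustration under assumptions: the helper names, the `Q:`/`A:` layout, the "The answer is" convention, and the arithmetic word problems are all chosen for the example and are not claimed to match the paper's exact format.

```python
import re


def build_standard_prompt(examples, question):
    """Standard few-shot prompt: each example shows only the final answer."""
    blocks = [f"Q: {q}\nA: The answer is {a}." for q, _, a in examples]
    blocks.append(f"Q: {question}\nA:")
    return "\n\n".join(blocks)


def build_cot_prompt(examples, question):
    """Chain-of-thought prompt: each example shows the rationale, then the answer."""
    blocks = [f"Q: {q}\nA: {r} The answer is {a}." for q, r, a in examples]
    blocks.append(f"Q: {question}\nA:")
    return "\n\n".join(blocks)


def extract_answer(completion):
    """Pull the final answer out of a completion that follows the example format.

    Simplified: stops at the first period, so it would truncate decimal answers.
    """
    match = re.search(r"The answer is (.+?)\.", completion)
    return match.group(1) if match else None


# One illustrative (question, rationale, answer) triple.
examples = [
    (
        "Roger has 5 tennis balls. He buys 2 more cans of tennis balls. "
        "Each can has 3 tennis balls. How many tennis balls does he have now?",
        "Roger started with 5 balls. 2 cans of 3 tennis balls each is "
        "6 tennis balls. 5 + 6 = 11.",
        "11",
    ),
]
question = (
    "The cafeteria had 23 apples. They used 20 to make lunch and bought "
    "6 more. How many apples do they have?"
)

standard_prompt = build_standard_prompt(examples, question)
cot_prompt = build_cot_prompt(examples, question)

# A hypothetical model completion, written by hand for this example.
completion = " They had 23 apples. 23 - 20 = 3. 3 + 6 = 9. The answer is 9."
answer = extract_answer(completion)
```

Both prompts end with the same unanswered question; the only difference is whether the worked examples expose their intermediate steps.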

Here are some results for using chain-of-thought prompting: